By Minco Staff

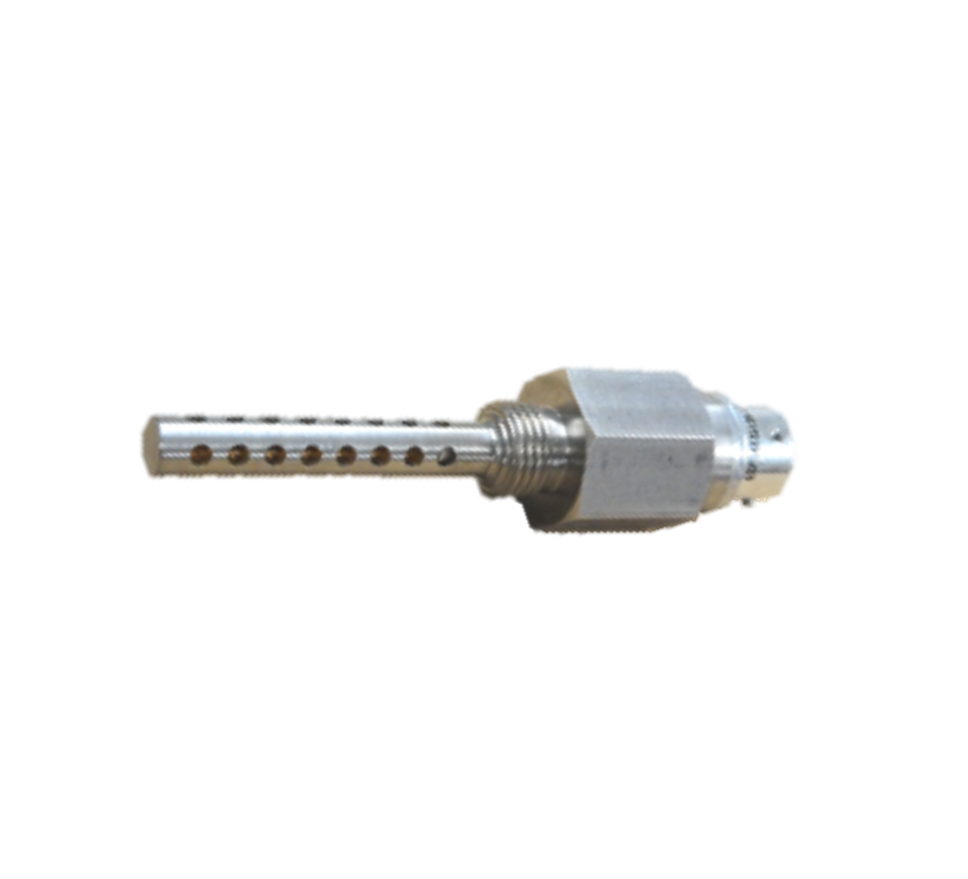

An important performance characteristic of a temperature sensor is its response time: how quickly the sensor registers a change in temperature. Response time is described in terms of a time constant, which is the time the sensor needs to register 63.2% of a step change in temperature. The time constant is usually obtained by test measurement.
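The 63.2% figure comes from the ideal first-order response. As a sketch, assume the sensor behaves like a single lump of uniform material that starts at temperature $T_0$ and is suddenly exposed to a medium at $T_f$ (the symbols $T_0$, $T_f$, and $\tau$ are notation introduced here for illustration):

$$
T(t) = T_f + (T_0 - T_f)\,e^{-t/\tau}
$$

Evaluating at $t = \tau$ gives the fraction of the step the sensor has registered:

$$
\frac{T(\tau) - T_0}{T_f - T_0} = 1 - e^{-1} \approx 0.632
$$

Under this assumption the sensor has covered about 63.2% of the step after one time constant, and about 86.5% after two.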

Engineers often create this step change by rapidly plunging a room-temperature sensor into heated water or oil. Even though most temperature sensors do not experience an instantaneous step change in temperature during actual use, the time constant is useful for comparing the relative performance of different sensor types or analyzing variation within a production batch.

The most important takeaway from time constant theory is that the farther a part's geometry deviates from a simple lump of uniform material, and the more thermally resistive it becomes, the less constant the “time constant” is going to be.
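One way to see this is to compute the time constant implied by different points on the same response curve. The sketch below is illustrative only: `lumped` is an ideal first-order response, and `two_stage` is a hypothetical stand-in for a thermally resistive, layered sensor, modeled here as the sum of a fast and a slow exponential. Neither function nor its parameter values comes from measured data.

```python
import math

def apparent_tau(t, fraction):
    """Time constant implied by one point, if the response were first order."""
    return -t / math.log(1.0 - fraction)

def lumped(t, tau=2.0):
    """Fraction of the step registered by an ideal lumped sensor."""
    return 1.0 - math.exp(-t / tau)

def two_stage(t, fast=0.5, slow=5.0, weight=0.5):
    """Hypothetical non-lumped sensor: a fast and a slow exponential combined."""
    return 1.0 - weight * math.exp(-t / fast) - (1.0 - weight) * math.exp(-t / slow)

sample_times = [0.5, 2.0, 8.0]  # seconds, chosen arbitrarily
lumped_taus = [apparent_tau(t, lumped(t)) for t in sample_times]
two_stage_taus = [apparent_tau(t, two_stage(t)) for t in sample_times]

print("lumped:   ", lumped_taus)
print("two-stage:", two_stage_taus)
```

For the lumped model every sample point implies the same time constant. For the two-stage model the implied value grows as the test proceeds, so a single published number depends on where along the curve it was taken.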

For a rigorous approach, all sensor outputs should be converted to temperature before calculating the time constant. Practically, signals that are linear or close to linear with temperature, such as resistance or voltage, can be used directly.
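A minimal sketch of this calculation follows, assuming logged step test data with a settled final reading. The function name `time_constant_63` and the synthetic data are hypothetical. The resistance series uses a linear approximation of a 100 Ω platinum element (0.00385 per °C) purely to show that a signal linear in temperature yields the same result as the temperatures themselves.

```python
import math

def time_constant_63(times, readings):
    """Estimate the time at which the reading covers 63.2% of the step.

    Assumes readings[0] is the initial value, readings[-1] is the settled
    final value, and the response is monotonic in between.
    """
    start, final = readings[0], readings[-1]
    span = final - start
    if span == 0:
        raise ValueError("no step change in the data")
    target = 1.0 - math.exp(-1.0)  # about 0.632
    prev_t, prev_frac = times[0], 0.0
    for t, reading in zip(times[1:], readings[1:]):
        frac = (reading - start) / span
        if frac >= target:
            # Linear interpolation between the two bracketing samples.
            return prev_t + (target - prev_frac) * (t - prev_t) / (frac - prev_frac)
        prev_t, prev_frac = t, frac
    raise ValueError("response never reached 63.2% of the step")

# Synthetic plunge test: 20 °C sensor into an 80 °C bath, sampled every 0.1 s.
# TAU_TRUE is an arbitrary value used only to generate the example data.
TAU_TRUE = 2.0
times = [0.1 * i for i in range(201)]
temps_c = [80.0 + (20.0 - 80.0) * math.exp(-t / TAU_TRUE) for t in times]

# The same test expressed as a signal that is linear in temperature.
ohms = [100.0 * (1.0 + 0.00385 * temp) for temp in temps_c]

tau_from_temps = time_constant_63(times, temps_c)
tau_from_ohms = time_constant_63(times, ohms)
print(tau_from_temps, tau_from_ohms)
```

Because the calculation only uses the fraction of the step covered, any offset and scale in a linear signal cancel out. A noticeably nonlinear signal would shift the 63.2% crossing, which is why converting to temperature first is the rigorous route.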

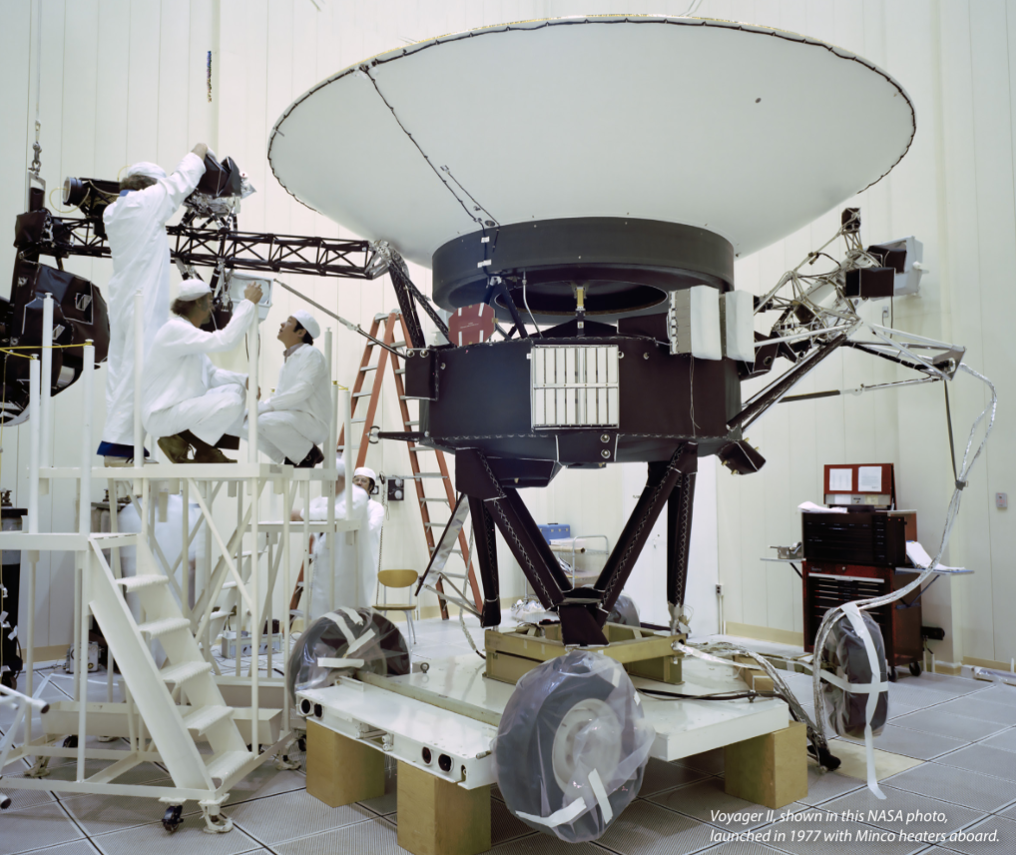

Unfortunately, standard test methods rarely match the heat transfer conditions of actual use, and published results do not always honestly convey the real-life performance of a sensor. Whether a shorter (most often) or longer response time is required, it is critical that a given temperature sensor design has a consistent and accurately measured response time. This ensures consistent and reliable performance across all sensors used in an application.

Want to learn more about managing response time for temperature sensors within your application? We dig deeper in our newest whitepaper, available here.